To the average person, uranium enrichment feels too scientifically complex to understand. People usually hear about enrichment alongside a host of other advanced-sounding concepts, which can put off someone who would otherwise have liked to understand the process better. At a fundamental level, however, enrichment is actually quite simple: the goal is to separate the different kinds of uranium that are mixed together when it is mined from the Earth. That does not sound like a complicated task, but these two types, or isotopes, of uranium are practically identical in every physical respect except for their weight and the fact that one of them is fissile. One isotope, 238U, is mostly useless for energy generation or nuclear weapons, yet it makes up 99.284% (by weight) of all uranium on Earth. The other isotope, 235U, is the one we want, because it is fissile: it can sustain the nuclear chain reaction we think of when we hear "nuclear reaction." This is why nuclear power plants work, and why uranium-based nuclear weapons explode. However, 235U makes up only 0.711% of the uranium on Earth, and it is homogeneously mixed with 238U in uranium ore. These two isotopes turn out to be incredibly difficult to sort, because their atomic weights differ by only about 1%, so people continue to search for better, cheaper, and faster ways to separate them. Recently, a technology has emerged that makes sorting these atoms much faster, which, in the context of nuclear proliferation, can be a bad thing.

The refinement of technology throughout civilization has followed a consistent pattern: machines become easier to use, more efficient in their use of resources, faster at the task they were designed to perform, and, for all these reasons, cheaper to operate. This is exactly what has happened with the technology people have developed to enrich uranium over the past seven decades or so. The Manhattan Project saw the advent of the "gaseous diffusion" enrichment process, which was the most technologically advanced and cheapest process for separating uranium at that time. Gaseous diffusion was eventually replaced by the "gas centrifuge" enrichment process, which was much faster and could perform the same amount of separative work while consuming far less electricity. Centrifuge enrichment remains the primary enrichment process throughout the world today, but we are now at another crossroads, with lasers poised to take the enrichment industry by storm, promising much quicker uranium enrichment while supposedly using even less electricity once the process has been optimized. People are getting better and better at sorting atoms.

ENRICHMENT METHODS

The gaseous diffusion process was cutting-edge technology in the 1940s, and it operated on a very simple premise: turn uranium into a gas and push it through porous barriers. Gas molecules containing the lighter 235U move slightly faster on average than those containing the heavier 238U, so they pass through the barrier's tiny pores slightly more often, and the gas that makes it through is a little richer in 235U. Scientists, engineers, and chemists made this work by combining uranium with fluorine to form the compound uranium hexafluoride (UF6)[1] and then forcing this gas through advanced membranes (U.S. Nuclear Regulatory Commission). The end result was low-enriched uranium (LEU) that could be used for nuclear energy generation, but if that LEU was cycled back through the system many more times, it could ultimately be enriched to 90% or more 235U. Uranium enriched to 20% or more 235U is called highly enriched uranium (HEU), and HEU at around 90% is what is used in nuclear weapons. The gaseous diffusion process gave governments a means to enrich uranium, but it is quite slow and uses a tremendous amount of electricity. These drawbacks prompted the next epoch of enrichment technology.
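The size of this effect can be estimated with Graham's law of effusion, under which a gas's effusion rate scales as one over the square root of its molar mass. The sketch below assumes ideal effusion and uses rounded standard atomic masses; the constant and function names are illustrative and are not taken from the essay's sources. It shows why a single diffusion stage achieves a separation factor of only about 1.004.

```python
import math

# Standard atomic masses in g/mol (rounded).
M_U235 = 235.0439
M_U238 = 238.0508
M_F = 18.9984  # fluorine has a single stable isotope (19F), so only uranium varies


def uf6_mass(uranium_mass: float) -> float:
    """Molar mass of a UF6 molecule built from the given uranium isotope."""
    return uranium_mass + 6 * M_F


def ideal_effusion_factor(light: float, heavy: float) -> float:
    """Graham's law: rate ~ 1/sqrt(M), so the ideal factor is sqrt(heavy / light)."""
    return math.sqrt(heavy / light)


light, heavy = uf6_mass(M_U235), uf6_mass(M_U238)
alpha_uf6 = ideal_effusion_factor(light, heavy)
print(f"235UF6 = {light:.2f} g/mol, 238UF6 = {heavy:.2f} g/mol")
print(f"ideal single-stage separation factor = {alpha_uf6:.4f}")
```

This prints molar masses of about 349.03 and 352.04 g/mol and an ideal factor of about 1.0043, in line with the 1.004 figure used in the comparison table later in this essay. Real barriers fall short of this ideal, which is one reason diffusion plants needed so many stages.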

The centrifuge enrichment process completely changed the enrichment industry. When the aforementioned UF6 gas is injected into a cylinder rotating at very high speed, the lighter 235UF6 tends to collect near the axis of rotation and toward the top of the cylinder, where it can be selectively withdrawn, while the heavier 238UF6 collects near the wall and toward the bottom of the cylinder (U.S. Nuclear Regulatory Commission). This explanation makes the process sound deceptively simple, but in reality these centrifuges rotate so fast (typically 50,000-70,000 rpm) that they push the physical limits of the aluminum, steel, or carbon fiber components they are made of. These materials problems were ultimately overcome, allowing processors to enrich uranium much faster while using about one-fiftieth as much electricity as the gaseous diffusion process to produce the same amount of material at the same enrichment level (Cameco Corporation).
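A common textbook estimate for a single rotor is the equilibrium radial separation factor, alpha = exp(dM * v^2 / (2RT)). Here dM is the molar-mass difference between 238UF6 and 235UF6 (about 3 g/mol), v is the rotor's peripheral (wall) speed, and T is the gas temperature. The sketch below assumes a hypothetical rotor of 8 cm radius spinning at 60,000 rpm, within the range quoted above, with the gas at 300 K. Real machines also rely on an internal circulating flow, so treat the result as an illustration of scale, not a design figure.

```python
import math

R = 8.314        # molar gas constant, J/(mol*K)
DELTA_M = 0.003  # molar-mass difference between 238UF6 and 235UF6, kg/mol (about 3 g/mol)


def peripheral_speed(rpm: float, radius_m: float) -> float:
    """Wall speed in m/s of a rotor with the given rotation rate and radius."""
    omega = rpm * 2 * math.pi / 60  # angular velocity, rad/s
    return omega * radius_m


def radial_separation_factor(speed_m_s: float, temperature_k: float) -> float:
    """Equilibrium axis-to-wall separation factor exp(dM * v^2 / (2 R T))."""
    return math.exp(DELTA_M * speed_m_s**2 / (2 * R * temperature_k))


v = peripheral_speed(60_000, 0.08)  # hypothetical rotor: 60,000 rpm, 8 cm radius
alpha = radial_separation_factor(v, 300.0)
print(f"peripheral speed ~ {v:.0f} m/s, separation factor ~ {alpha:.3f}")
```

With these assumptions the wall speed is roughly 500 m/s and the factor is about 1.16, inside the 1.05-1.2 range given for centrifuges. Because the exponent grows with the square of the wall speed, faster rotors separate much better, which is why centrifuge designers push their rotor materials to the limit.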

The invention of the laser and its wider availability in the 1960s prompted Los Alamos researchers, a decade later, to theorize that a finely tuned laser could be used to separate isotopes of uranium, but it was not until the 21st century that efforts to commercialize the technology gained real momentum (SILEX Systems). Two laser-based enrichment methods were developed: Atomic Vapor Laser Isotope Separation (AVLIS) and Molecular Laser Isotope Separation (MLIS). AVLIS works on vaporized uranium metal and relies on giving individual 235U atoms an electrical charge: the laser excites an atom so strongly that it loses an electron and becomes ionized. The laser can discriminate between 235U and 238U because the two isotopes have slightly different nuclei, which shifts their spectral absorption lines slightly, much like each isotope having its own resonant frequency. By precisely tuning the laser system (for example, a tunable dye laser pumped by a solid-state Nd:YAG laser), one can make the 235U atoms absorb the energy while the 238U atoms effectively ignore it. This is no small feat, however, as the laser wavelength must be accurate to within a single angstrom.[2] After the uranium vapor has been lased, a negatively charged collector plate captures the positively charged 235U ions (U.S. Nuclear Regulatory Commission).

Despite the viability of AVLIS, MLIS seems to have emerged as the method of choice for commercial laser enrichment.[3] The idea of using lasers to sort isotopes was conceived at Los Alamos National Laboratory in the 1970s, but SILEX Systems, an Australian company, is widely regarded as the commercial pioneer in this field (Los Alamos National Laboratory). SILEX began work on its process in the 1990s and eventually licensed the technology to General Electric and Hitachi, which are currently building a test facility that amounts to a large-scale proof of concept (SILEX Systems). Fundamentally, MLIS shares many similarities with AVLIS, though there are some important distinctions. In MLIS, the uranium is processed as the UF6 compound, just as it is in conventional enrichment methods. The aim is to induce a chemical change by breaking the molecule in a specific way, and MLIS does this with two lasers used in sequence. The first, an excitation laser, typically uses a carbon dioxide lasing medium, whose infrared output (natively around 10 μm) is shifted to approximately 16 μm. That is the wavelength at which 235UF6 molecules absorb strongly while 238UF6 molecules largely do not, so only the 235UF6 molecules are set vibrating and left in an excited, less stable state. After passing through the infrared beam, the UF6 passes through the beam of a second laser, whose quick, high-energy pulse of light is tuned to a wavelength absorbed by the excited 235UF6 and breaks apart only those molecules (U.S. Nuclear Regulatory Commission). A good analogy for this process is the classic TV trope in which a character sings so loudly and at such a high pitch that wine glasses and windows eventually shatter.
The laser is effectively exploiting the resonant frequency of the excited molecules, and when it does, one of the bonds between the uranium atom and its fluorine atoms (recall that UF6 is a single uranium atom bonded to six fluorine atoms) breaks, and a fluorine atom splits off from the molecule; this is called dissociation (Jensen, Judd and Sullivan). What remains is UF5 and a free fluorine atom, and the immensely useful property of UF5 is that it is a solid at the same pressure and temperature at which UF6 is a gas! The UF5 immediately precipitates out of the UF6 gas as a powder, so conventional filtration methods can physically separate it from the feedstock. Meanwhile, a scavenger gas such as methane captures the free fluorine atom to prevent it from re-fluorinating the UF5 (U.S. Nuclear Regulatory Commission). Because this UF5 precipitate contains a disproportionately large share of 235U compared with natural uranium, we can say that it has been enriched.
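The chemistry described above can be summarized as a simplified reaction scheme. It assumes one excitation step and one dissociation step (in practice the infrared step may involve more than one photon), and the photon symbols are illustrative notation rather than specific laser parameters. The second line is the standard hydrogen-abstraction reaction by which methane would scavenge the free fluorine atom.

```latex
\begin{align}
{}^{235}\mathrm{UF_6} &\xrightarrow{\,h\nu_1\ (\approx 16\ \mu\mathrm{m})\,} {}^{235}\mathrm{UF_6^{*}} \xrightarrow{\,h\nu_2\,} {}^{235}\mathrm{UF_5}\,(\mathrm{s}) + \mathrm{F} \\
\mathrm{F} + \mathrm{CH_4} &\rightarrow \mathrm{HF} + \mathrm{CH_3}
\end{align}
```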

Beyond being an impressively innovative and elegant way to sort uranium atoms, this process is also much faster. To compare the three enrichment processes, consider the typical separation factor[4] of each technology:

| Technology | Separation Factor | # Stages for LEU (4%)[5] | # Stages for HEU (90%) | Required Facility Size |
|---|---|---|---|---|
| Gaseous Diffusion | 1.004 | 440-1760 | 1800-7000 | Large |
| Gas Centrifuge | 1.05-1.2 | 10-36 | 39-146 | Moderate |
| Laser Separation | 2-6[6] | 1-3 | 4-10 | Small |

What the above table tells us is that for uranium to be enriched to the point that it is usable fuel in a nuclear power plant (assumed to be 4% 235U in this example), it would have to pass through 440-1760 stages of membranes/diaphragms in a gaseous diffusion facility,[7] 10-36 stages of centrifuges (the range reflects differences in efficiency between centrifuge models), or an AVLIS/MLIS system only one to three times. Similarly, it would take only 4-10 passes through an AVLIS/MLIS device to produce weapons-grade uranium (assumed to be 90% 235U in this example), compared with 39-146 centrifuge stages or 1800-7000 gaseous diffusion stages. Depending on the throughput of each system, i.e. the volume of feed material a system can process over a given interval, AVLIS/MLIS is likely an order-of-magnitude improvement in enrichment speed over centrifuge enrichment: a true game changer. Furthermore, AVLIS/MLIS largely avoids enriching 234U along with 235U, whereas gaseous diffusion and centrifuge enrichment actually concentrate 234U in the product, because it is even lighter than 235U (U.S. Nuclear Regulatory Commission). 234U is undesirable in the final product because, like 238U, it is not fissile. Given these vast advantages over traditional enrichment methods, it is easy to see why AVLIS/MLIS is such an attractive option for any entity that wishes to enrich uranium.
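The stage estimates in the table follow the simplified method described in footnote [5]: the number of stages is the logarithm, in base alpha, of how much the 235U/238U ratio has to grow. The sketch below reproduces only that simplified method. It assumes the essay's 0.711% natural 235U figure, treats everything else as 238U, and ignores tails streams and real cascade design, so the outputs are idealized stage counts. The helper names are hypothetical.

```python
import math

NATURAL_U235 = 0.00711  # 235U fraction of natural uranium, as used in the essay


def isotope_ratio(fraction_u235: float) -> float:
    """235U/238U ratio, treating everything that is not 235U as 238U."""
    return fraction_u235 / (1.0 - fraction_u235)


def stages_needed(alpha: float, target: float, feed: float = NATURAL_U235) -> float:
    """Idealized stage count: log base alpha of (target ratio / feed ratio)."""
    required_gain = isotope_ratio(target) / isotope_ratio(feed)
    return math.log(required_gain) / math.log(alpha)


# Separation factors at the ends of the ranges listed in the table above.
TECHNOLOGIES = {
    "Gaseous diffusion": [1.004],
    "Gas centrifuge": [1.05, 1.2],
    "Laser separation": [2.0, 6.0],
}

for name, alphas in TECHNOLOGIES.items():
    for alpha in alphas:
        leu = stages_needed(alpha, 0.04)
        heu = stages_needed(alpha, 0.90)
        print(f"{name:18} alpha={alpha:<6} LEU: {leu:7.1f} stages  HEU: {heu:7.1f} stages")
```

For example, at alpha = 1.004 the 4% target needs about 441 stages, at alpha = 2 it needs about 2.5, and at alpha = 1.05 the 90% target needs about 146.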

LASER ENRICHMENT AND PROLIFERATION

Some may read this and ask, 'Does this groundbreaking technology change the dynamics of the ongoing international push against nuclear proliferation?' This question is really analogous to asking whether there would be more nuclear weapons states if it were easier to covertly manufacture nuclear weapons. Personally, I believe the answer is yes, though I admit the answer is speculative. At this point, the biggest obstacle for nation-states that aspire to build nuclear weapons is the risk they assume when they build an enrichment facility: they are gambling that U.S. satellites will not discover it and prompt uncomfortable questions. What has changed is that these enrichment facilities no longer have to be large, because AVLIS/MLIS is a much more compact system.

AVLIS and MLIS both require a much smaller infrastructure footprint than centrifuge and diffusion methods. Centrifuges used in uranium enrichment can be truly massive devices, with some advanced carbon fiber designs up to forty feet tall and two feet in diameter, and a typical facility houses several thousand of them (Glaser). Gaseous diffusion plants are notoriously enormous and bulky; they require large cooling systems and typically draw hundreds or even thousands of megawatts of power at any given time to force their uranium gas through the aforementioned membranes (Centrus Energy). Both types of facility are comparatively easy to identify in satellite imagery because of their size and distinctive features. Laser enrichment, however, makes it possible to quickly produce an appreciable amount of weapons-grade fissile material in a small facility that is correspondingly difficult to detect. It needs no particularly large cooling systems, and although it uses a large amount of power compared with similarly sized civilian facilities, it does not use so much that it requires substantial, easily identified electrical infrastructure (U.S. Nuclear Regulatory Commission). This means it will be much easier for countries that wish to trudge down the nuclear path to hide their enrichment infrastructure. Although people generally try to shrink and improve the efficiency of practically every machine humans have ever invented, the "bulkiness" of conventional enrichment infrastructure happens to be useful, because it is distinctive and easier to detect.

It is already challenging enough to prevent the proliferation of nuclear weapons. It is fundamentally in every state's interest to obtain them, because nuclear weapons function as a strong "final word" in any serious foreign-relations quarrel. Look to the decade-long dispute between the United States and Iran over the Iranian nuclear program, which illustrates how difficult it is to reverse the course of an advanced nuclear program once it is in place. Over ten years elapsed before a multilateral agreement with Iran was reached, and many people in both countries are still not satisfied with the result. The global community is also currently dealing with a seemingly aggressive, nuclear-armed North Korea, with no particularly good solution in sight. In a hypothetical world where one could instantly obtain weapons-grade fissile material by feeding uranium ore into some kind of magical enriching machine, there is no doubt that every nation would have vast stockpiles of it. Obviously we do not live in that world, but it stands to reason that if enriching uranium to weapons-grade concentrations of 235U becomes easier in any way, more of it will be produced. Guns were, at one point in history, very difficult and time-consuming to manufacture, and for that reason they were a rarity. That is, of course, no longer the case. The introduction of AVLIS/MLIS prompts us to ask whether civilization, collectively, is mature enough for the proliferation of rapid enrichment capabilities.

Furthermore, enriched uranium is already quite cheap. This is especially apparent when one looks at how much of the cost of generated electricity comes from fuel for different generation technologies. For instance, a study performed several years ago in Finland compared nuclear with gas, coal, and wind power and quantified how many cents of each kilowatt-hour went to fuel costs, which for nuclear means uranium ore and the cost to process and enrich it. For nuclear power, only 11.4% of the cost of a kilowatt-hour can be attributed to fuel, compared with 59.7% for natural gas and 25.5% for coal. This means that even if nuclear reactor operators could obtain their enriched uranium for free, the price of a nuclear-generated kilowatt-hour would hypothetically fall only from 2.37 to 2.10 cents, because capital costs and operations and maintenance expenses make up the vast majority of the cost of producing nuclear power (World Nuclear Association). Perhaps electricity producers could scrape out a small amount of extra profit from the savings that laser enrichment offers, but it is doubtful that any of these savings would be passed on to consumers. In other words, if any energy generation technology really needs to make its fuel acquisition more efficient, it is certainly not nuclear. The emergence of cheap natural gas has, to some extent, forced innovation and cost-cutting in the nuclear industry to keep it competitive, but the security concerns associated with rapid enrichment seem to outweigh the benefits.
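The free-fuel arithmetic above is easy to check: if fuel cost nothing while capital and operating costs stayed fixed, the price per kilowatt-hour would be scaled by (1 - fuel share). The sketch below uses the 2.37 cents per kilowatt-hour total and the fuel shares quoted from the Finnish study. Since the totals for gas and coal are not given here, it reports only their percentage reductions; the function name is a hypothetical helper.

```python
# Fuel cost as a share of generation cost, as quoted from the Finnish study.
FUEL_SHARE = {"nuclear": 0.114, "natural gas": 0.597, "coal": 0.255}
NUCLEAR_TOTAL_CENTS = 2.37  # cost of a nuclear-generated kWh in that study


def cost_without_fuel(total: float, fuel_share: float) -> float:
    """Cost per kWh if fuel were free, holding capital and O&M costs fixed."""
    return total * (1.0 - fuel_share)


nuclear_free_fuel = cost_without_fuel(NUCLEAR_TOTAL_CENTS, FUEL_SHARE["nuclear"])
print(f"nuclear: {NUCLEAR_TOTAL_CENTS:.2f} -> {nuclear_free_fuel:.2f} cents/kWh")
for source, share in FUEL_SHARE.items():
    print(f"{source}: free fuel would cut the cost by {share:.1%}")
```

The nuclear line prints 2.37 -> 2.10 cents/kWh, while free fuel would cut the cost of gas-fired power by more than half.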

INTERNATIONAL REGULATORY SOLUTIONS

At this point, there is no turning back the clock on AVLIS/MLIS. The genie is out of the bottle. The very fact that I was able to write this research paper with some degree of technical accuracy about the laser enrichment process is a testament to that. The technical specifications of the SILEX process are classified by the U.S. government, but there is enough knowledge available on the Internet alone for a large team of physics, chemistry, and engineering PhDs with government-level funding to set up a laser enrichment facility and eventually get it working through trial and error. In other words, although this is highly advanced technology, it is not out of reach for modernized countries like Saudi Arabia, Turkey, or Egypt to develop themselves.

It is possible that the International Atomic Energy Agency (IAEA) could impose an international regulation on the use of lasers for uranium enrichment. A plausible regulation might ban operating industrial-scale, multi-kilowatt lasers at certain wavelengths in the absorption spectra of the 235UF6 molecule or the 235U atom, but this presents a few issues. First, humanity's understanding of lasers has advanced so far that, on a global scale, people who are familiar with laser tuning are fairly commonplace. Moreover, there are now several readily employed methods for reaching a highly specific wavelength in a relatively short amount of time.[8] Tuning a laser into or out of a specific wavelength is by no means easy, but it is not prohibitively difficult for someone who has studied photonics. That would make it very hard for the IAEA ever to catch someone using a laser to enrich uranium "red-handed," not to mention the complexity of testing wavelengths, especially in a closed system.

Second, the AVLIS/MLIS processes are useful outside the nuclear power and weapons industries. They can be used to enrich isotopes used in the medical industry (Eerkens, Kunze and Bond). Regulation could make this peaceful application much more troublesome, since teams would presumably be forced to shut down their operations and/or dismantle their systems for periodic inspections. Third, if the United States were the country that ultimately called for international regulation of industrial-scale lasers capable of isotope separation, it would face charges of hypocrisy. The U.S. is one of only a handful of countries that have explored this technology, and it is the only country currently constructing a commercial-scale laser enrichment facility. Many countries already resent the U.S. for seemingly not making any meaningful reduction in its nuclear weapons stockpile (Wan). Acquiring an AVLIS/MLIS capability and then calling on every other country to forgo it would only intensify this sentiment.

CONCLUSIONS

Technological innovation is a good thing from which all of humanity can reap benefits. The average person would be hard-pressed to identify any instance in which new technology made global society as a whole worse off. However, technology related to the advancement of weapons is an obvious exception, and it can easily be argued that the uranium enrichment industry falls into this category. It is unique in the sense that the technology enrichers employ could be gravely harmful if it were to fall into the wrong hands, and AVLIS/MLIS most certainly will at some point in the future. There is no doubt that the AVLIS/MLIS processes have peaceful and otherwise useful applications outside the nuclear energy industry, but if we are to embrace this technology, we must also come to terms with, and accept, the accompanying risk of spreading nuclear weapons manufacturing capabilities.

It is troubling to argue against such a state-of-the-art and elegant solution to the problem of sorting uranium atoms. Laser isotope separation is a triumph of human innovation and of modern science. However, it becomes morally paralyzing to try to reconcile the advancement of chemistry and physics as academic disciplines with the potentially harmful real-world applications of their innovations. For this reason, I believe that one can rationally appreciate the scientific achievement of inventing and demonstrating this process without also advocating for its use. The invention of nuclear weapons is a testament to humanity's scientific progress, ingenuity, and intellect, but that does not mean that nuclear weapons were or are a good thing.

Although rapid uranium enrichment presents a potential proliferation threat, one can take solace in the fact that it is arguably one of the most technologically advanced processes on Earth, and that the intellectual capital and resources required to make it work can be found only at the governmental level in relatively advanced nations. This means we do not have to worry about a dubious group of terrorists producing weapons-grade uranium with lasers anytime soon. It is also worth remembering that even if an independent group produced weapons-grade uranium in a covert laser enrichment facility, it would still have to build a bomb around the core, which is essentially just as difficult as building the laser to enrich the fuel. Beyond that, the group would still need a delivery vehicle, such as a satellite-guided cruise missile, which is at least as difficult to build as an AVLIS/MLIS system and a nuclear bomb. I say this to underscore that the laser enrichment process does not suddenly give every government the ability to produce and deliver a nuclear weapon. My aim is not to fear-monger. My point is that it changes the calculus of that ability: it makes a relatively meticulous, bulky, expensive, and identifiable process more efficient, more compact, less costly, and more clandestine. This is why I believe we should focus on preventing the proliferation of this technology.

-Tyler

FOOTNOTES

[1] Uranium hexafluoride is particularly useful because, at atmospheric pressure, it sublimes from a solid to a gas at a remarkably low temperature of about 133°F (56.5°C).

[2] An angstrom is equal to one ten-billionth of a meter.

[3] This is largely because it is far easier to work with UF6 as a gas than it is to vaporize uranium metal.

[4] Defined as the isotope ratio (235U/238U) after a stage of separation divided by the isotope ratio before that stage.

[5] The number of separation stages required for 4% enrichment was calculated using the separation factor of each technology, as sourced from the three cited U.S. Nuclear Regulatory Commission publications. Treating everything other than 235U as 238U, natural uranium has a 235U/238U ratio of 0.711/99.289 and 4% enriched uranium has a ratio of 4/96, so the ratio must grow by a factor of (4/96)/(0.711/99.289), or about 5.82. Starting from natural uranium, 4% could therefore be reached in a single stage if the technology had a separation factor of at least 5.82. From there, one can simply evaluate log_x(5.82), where x is the separation factor of the technology; the result is the approximate number of stages that technology would require to reach a 4% concentration of 235U. The HEU column is calculated the same way, as log_x(1256.83), since (90/10)/(0.711/99.289) is about 1256.83.

[6] The separation factors of MLIS and AVLIS have been reported as anywhere from two to six. The technology is still largely in the developmental phase, and a commercialized version will likely achieve separation factors toward or beyond the upper end of this range.

[7] The 440 stages listed for LEU in a gaseous diffusion plant are the theoretical minimum at a 1.004 separation factor, but many plants, such as those at Portsmouth and Paducah, required up to four times as many stages when they were still in operation.

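[8] Perhaps the most common is the dye laser, whose lasing medium is an organic dye that emits over a broad band of wavelengths; the choice of dye sets the available range, and optical elements select a narrow wavelength within it. There are other methods as well, such as shifting the output wavelength optically.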

REFERENCES

Cameco Corporation. Cameco U101 Fuel Processing: Enrichment. 2016. 20 April 2016. <https://www.cameco.com/uranium_101/fuel-processing/enrichment/>.

Centrus Energy. Paducah Gaseous Diffusion Plant. 2013. 20 April 2016. <http://www.centrusenergy.com/gaseous-diffusion/paducah-gdp>.

Eerkens, Jeff, Jay Kunze and Leonard Bond. “Laser Isotope Enrichment for Medical and Industrial Applications.” 14th International Conference on Nuclear Engineering. Miami: Idaho National Laboratory, 2006. 1-13.

Glaser, Alexander. “Characteristics of the Gas Centrifuge for Uranium Enrichment and Their Relevance for Nuclear Weapons Proliferation.” Science and Global Security 2008: 1-25.

Jensen, Reed, O’Dean Judd and Allan Sullivan. “Separating Isotopes with Lasers.” Los Alamos Science December 1982: 2-33.

Los Alamos National Laboratory. Los Alamos Science No.4 – Winter/Spring 1982. December 1982. 1 May 2016. <http://la-science.lanl.gov/lascience04.shtml>.

SILEX Systems. SILEX History. 2016. 28 April 2016. <http://www.silex.com.au/History>.

U.S. Nuclear Regulatory Commission. “Uranium Enrichment Processes: Gas Centrifuge.” 21 October 2014. USNRC Technical Training Center. 6 May 2016. <http://pbadupws.nrc.gov/docs/ML1204/ML12045A055.pdf>.

—. “Uranium Enrichment Processes: Gaseous Diffusion.” 21 October 2014. USNRC Technical Training Center. 4 May 2016. <http://pbadupws.nrc.gov/docs/ML1204/ML12045A050.pdf>.

—. Uranium Enrichment Processes: Laser Enrichment Methods (AVLIS and MLIS). 21 October 2014. USNRC Technical Training Center. 1 May 2016. <http://pbadupws.nrc.gov/docs/ML1204/ML12045A051.pdf>.

Wan, Wilfred. “Why the 2015 NPT Review Conference Fell Apart.” 28 May 2015. United Nations University Centre for Policy Research. 2 May 2016. <http://cpr.unu.edu/why-the-2015-npt-review-conference-fell-apart.html>.

World Nuclear Association. The Economics of Nuclear Power. March 2016. 3 May 2016. <http://www.world-nuclear.org/information-library/economic-aspects/economics-of-nuclear-power.aspx>.

If you would like to support my work and want me to be able to afford Top Ramen (or maybe even Mellow Mushroom pizza if you all are extraordinarily generous) while I’m working on these statistics, please click this link to donate to

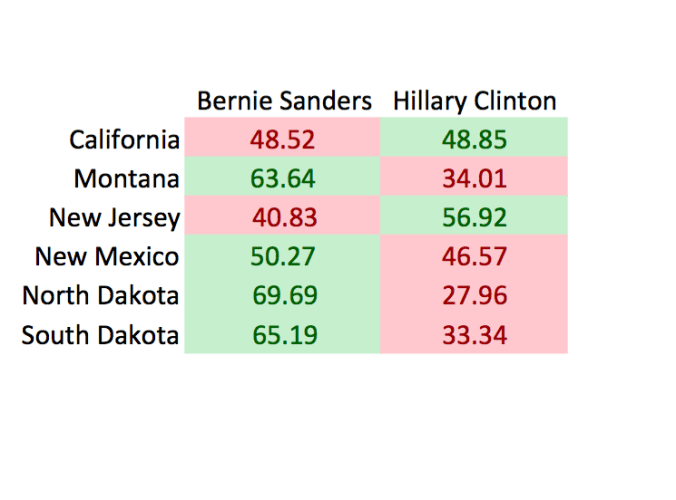

West Virginia is 3.4% African American, similar to states like Washington, Nebraska, and Colorado, all of which Hillary Clinton lost by margins that were quite large. But, there are other factors that point towards a <10% margin of victory. First, the relative search interest between the two candidates is leaning Hillary in West Virginia. The average relative search interest in all of the states that Bernie has won thus far is 2.23, whereas in West Virginia the relative search interest is 0.94; meaning that West Virginians are actually searching for Hillary Clinton more than they are Bernie Sanders. In fact, the lowest his three day relative search interest has ever been in a state that he ultimately won was 1.54 in Minnesota, and just last week in Indiana the relative search interest was 1.66. Secondly, Bernie’s share of Facebook Likes in West Virginia is only 75%, compared to an average of 81.2% for all of the states that he has won. I know that 6.2% doesn’t sound like much, but it really does make a big difference when you consider that the entire range of values for this measure is between 63.6%-95% (Mississippi-Vermont). West Virginia is also a relatively old state, with a median age of 41.9, compared to 37.78, which is the average median age of all the states that he has won.
